Abstract

While deep learning models have become the predominant method for medical image segmentation, they typically cannot generalize to unseen segmentation tasks involving new anatomies, image modalities, or labels.

Given a new segmentation task, researchers must train or fine-tune a model. This is time-consuming and poses a substantial barrier for clinical researchers, who often lack the resources and expertise to train neural networks.

In this paper, we present UniverSeg, a method for solving unseen medical segmentation tasks without additional training.

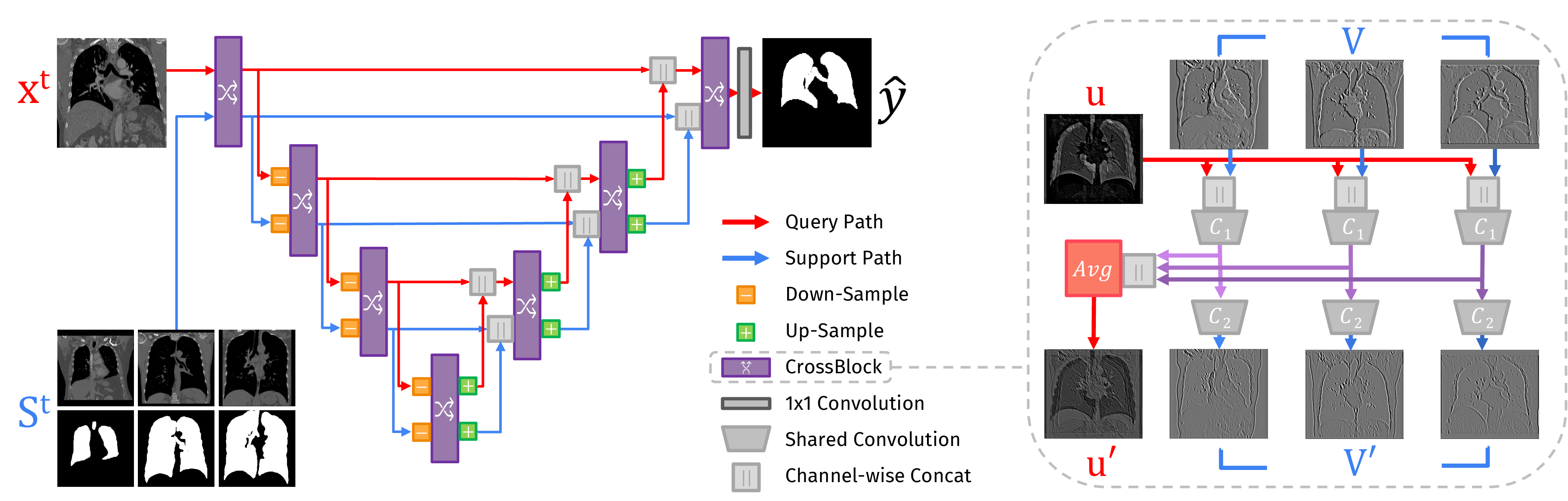

Given a query image and a support set of image-label pairs that define a new segmentation task, UniverSeg employs a novel CrossBlock mechanism to produce accurate segmentations without additional training. To achieve strong generalization to new tasks, we gathered and standardized MegaMedical, a collection of 53 open-access medical segmentation datasets with over 22,000 scans, and used it to train UniverSeg on a diverse set of anatomies and imaging modalities.
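To make the query/support interaction more concrete, here is a minimal, hypothetical sketch of a CrossBlock-style layer in pure Python. It is not the authors' implementation. The 1x1 "convolution", the ReLU, the flattened per-pixel channel layout (each support pixel carrying an image value plus a label value), the averaging step, and the names `cross_block`, `conv1x1`, and `concat` are all assumptions made for illustration. The sketch only shows the general idea that the query is combined with every support pair and the results are pooled, so the query output does not depend on the order of the support set.

```python
"""Hypothetical CrossBlock-style sketch (an assumption, not the UniverSeg code).

Assumptions: feature maps are flattened lists of per-pixel channel vectors,
the "convolution" is a 1x1 linear map followed by ReLU (no spatial
neighborhood), and the per-support results are averaged for the query.
"""
from typing import List, Tuple

Pixel = List[float]        # channel vector at one pixel
FeatureMap = List[Pixel]   # flattened H*W pixels


def relu(x: float) -> float:
    return x if x > 0.0 else 0.0


def conv1x1(fmap: FeatureMap, weights: List[List[float]]) -> FeatureMap:
    """1x1 convolution: each output channel is a ReLU'd weighted sum of input channels."""
    return [[relu(sum(w * c for w, c in zip(row, px))) for row in weights] for px in fmap]


def concat(a: FeatureMap, b: FeatureMap) -> FeatureMap:
    """Channel-wise concatenation of two maps with the same number of pixels."""
    assert len(a) == len(b), "query and support must share spatial size"
    return [pa + pb for pa, pb in zip(a, b)]


def cross_block(
    query: FeatureMap, support: List[FeatureMap], weights: List[List[float]]
) -> Tuple[FeatureMap, List[FeatureMap]]:
    """Combine the query with each support entry, then average over the support set.

    Returns (new_query, per_support_features). Averaging makes new_query
    independent of support-set order and defined for any support size >= 1.
    """
    z = [conv1x1(concat(query, v), weights) for v in support]
    n = len(z)
    new_query = [
        [sum(z_i[p][c] for z_i in z) / n for c in range(len(z[0][p]))]
        for p in range(len(query))
    ]
    return new_query, z
```

For example, with a 2-pixel, 1-channel query `[[1.0], [2.0]]`, two support entries `[[0.5, 1.0], [0.0, 0.0]]` and `[[1.5, 0.0], [1.0, 1.0]]` (image value, label value per pixel), and weights `[[1.0, 1.0, 1.0]]`, `cross_block` returns a query map of `[[2.5], [3.0]]`, and swapping the two support entries gives the same result.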

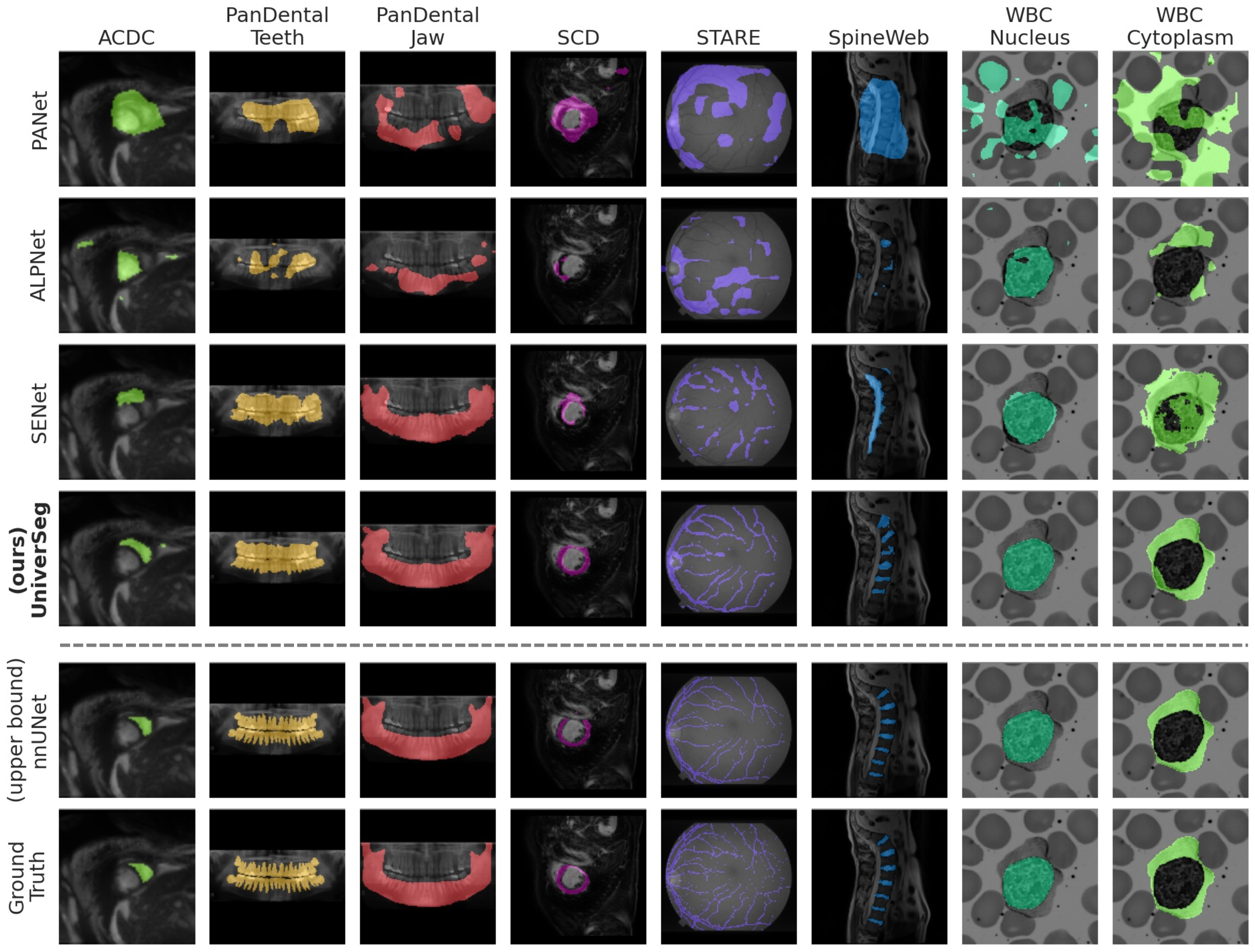

We demonstrate that UniverSeg substantially outperforms several related methods on unseen tasks, and we thoroughly analyze important aspects of our system and draw insights from them.

Citation

If you find our work or any of our materials useful, please cite our paper:

@article{butoi2023universeg,
  title={UniverSeg: Universal Medical Image Segmentation},
  author={Victor Ion Butoi* and Jose Javier Gonzalez Ortiz* and Tianyu Ma and Mert R. Sabuncu and John Guttag and Adrian V. Dalca},
  journal={International Conference on Computer Vision},
  year={2023}
}